If it wasn’t for me and my method of downloading big files (> 64K), @msatter would have nothing to work on…

http://forum.mikrotik.com/t/how-to-download-only-one-piece-of-file-at-a-time-with-tool-fetch-and-put-it-inside-a-variable/151020/1

Nah, don't worry about that. It's very obvious you have contributed the pivotal aspect of this approach/solution.

Never has anybody come up with this concept before, to my knowledge; I've never seen it in any posting over the past years.

That was some clever problem solving, and you deserve full credit for this one.


Remember, you and @jotne were my inspiration for that!

http://forum.mikrotik.com/t/importing-ip-list-from-file/143071/45

I still do not like this method of importing a list into an address-list without any sanitization first, and the use of on-error makes no sense either, just as on the delete.

Sooner or later, through some “on-error” or on purpose by the website hosting the list,

0.0.0.0/0 ends up on the address-list and blocks everything, or a wrong prefix like 151.99.125.9/2 (instead of /24) blocks everything from 128.x.x.x to 191.x.x.x,

because RouterOS imports 151.99.125.9/2 as 128.0.0.0/2.
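The effect of that typo can be reproduced off-router with Python's standard `ipaddress` module. This is only an illustrative sketch, not RouterOS code. It assumes RouterOS masks the host bits the same way `ip_network(..., strict=False)` does, which agrees with the 128.0.0.0/2 result quoted above. The helper name `effective_range` is hypothetical.

```python
import ipaddress

def effective_range(entry):
    """Return (network, first address, last address) once host bits are masked off."""
    net = ipaddress.ip_network(entry, strict=False)  # strict=False: mask host bits instead of raising
    return net, net.network_address, net.broadcast_address

# A typo'd /2 instead of /24 silently becomes a quarter of the whole IPv4 space
net, first, last = effective_range("151.99.125.9/2")
print(net, first, last)   # 128.0.0.0/2 128.0.0.0 191.255.255.255
print(net.num_addresses)  # 1073741824
```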

http://forum.mikrotik.com/t/importing-ip-list-from-file/143071/1

I’m already working on a method that handles lists > 64K and sanitizes what gets imported, like:

[…]

4) Create a whitelist: before adding an IP / IP prefix, check whether it is on the whitelist, and if it is, don’t add it

5) On add, check whether the IP prefix is already contained in another IP prefix on the address-list

6) On add, check whether the IP prefix contains one or more IP prefixes already on the address-list; if so, remove the old one(s) and add the new, bigger one.

7) For security, accept only prefixes from /12 to /32. On IPv4, /11 or shorter is too big to be true…

8) Add an option to put the IP on the address-list temporarily (dynamic) for a specified time (from 1 second to almost 35 weeks);

this type of entry is not included in an export or backup of the address-list.

With this option set, if the address is found again in the imported list, its timeout is reset (from 1 second to almost 35 weeks) instead of deleting and re-importing it.

[…]
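Rules 4 to 7 can be tried out off-router with Python's `ipaddress` module before porting them to a RouterOS script. This is a minimal sketch under stated assumptions: IPv4 only, the address-list is modelled as a plain Python list of networks, and "on the whitelist" is taken to mean "overlaps any whitelist network". The function name `sanitize_add` is hypothetical.

```python
import ipaddress

MIN_PREFIX, MAX_PREFIX = 12, 32  # rule 7: accept only /12 to /32

def sanitize_add(entries, whitelist, candidate):
    """Try to add candidate to entries (a list of IPv4Network); return (new_entries, verdict)."""
    net = ipaddress.ip_network(candidate, strict=False)
    # Rule 7: reject prefixes that are too large to be plausible
    if not MIN_PREFIX <= net.prefixlen <= MAX_PREFIX:
        return entries, "rejected: prefix length"
    # Rule 4: never add anything touching the whitelist (assumption: any overlap counts)
    if any(net.overlaps(w) for w in whitelist):
        return entries, "rejected: whitelisted"
    # Rule 5: already covered by an equal or bigger prefix on the list
    if any(net.subnet_of(e) for e in entries):
        return entries, "skipped: already covered"
    # Rule 6: drop smaller prefixes that the new one contains, then add the new one
    kept = [e for e in entries if not e.subnet_of(net)]
    kept.append(net)
    return kept, "added"

wl = [ipaddress.ip_network("192.168.0.0/16")]
entries, verdict = sanitize_add([], wl, "10.1.2.0/24")       # added
entries, verdict = sanitize_add(entries, wl, "10.1.2.5")     # skipped: already covered
entries, verdict = sanitize_add(entries, wl, "10.1.0.0/16")  # added, replaces 10.1.2.0/24
```

Rule 8 (refreshing the timeout of dynamic entries) is left out here because it depends on how the router stores timeouts.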

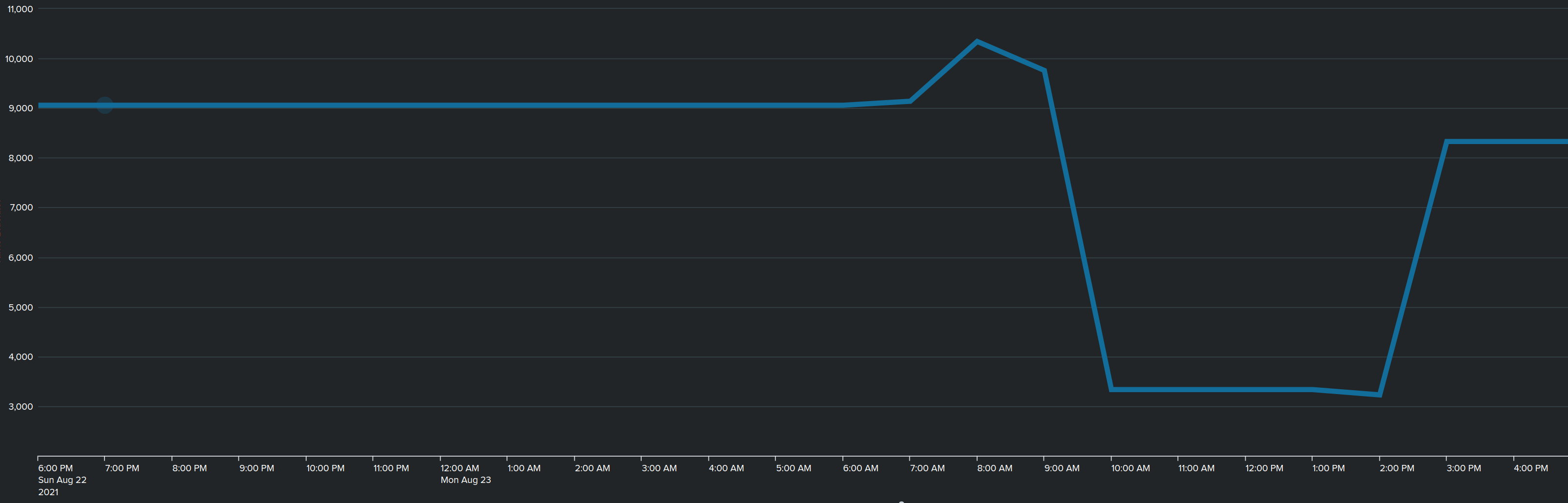

Speaking of sanitizing, today I stumbled on the fact that my “Turris” list was suddenly down to only ± 3000 entries!

This happened at the time the list performs its update in the morning. Very weird.

In the afternoon I completely flushed/erased the list and started the script manually, and now it’s up to ± 8K entries.

Not too sure what happened there, and I’ll be testing some more manually, because such a thing needs to be rock-solid and handle weirdness as data is ingested and processed, even if it takes significantly longer.

Hmm, I started the script and saw 2 entries fly by in the log: Address list <> update failed

The list seems to keep the same number of items as before I started the script, so nothing was flushed, nor was the timestamp updated.

It is a weekly list, so just update it at 06:30 am; polling it more often will only create more load on their side. No wonder there is now a proxy in front.

Also nice is that you can select certain categories of entries. This could be a second selector besides the presence of an IP address.

Legend for current Hei rules

| Rule | Description |
|---|---|
| amplifiers | Easily exploitable services for amplification |
| broken_http | Broken inbound HTTP (known services) |
| cryptocoin | Cryptocoin miners |
| databases | Database servers |
| dns | Incoming DNS queries |
| http_scan | HTTP/S scans |
| low_ports | Low ports (<1024) |
| netbios | NetBIOS |
| netis | Netis router exploit |
| ntp | NTP |
| proxy_scan | Scans for HTTP/S and SOCKS proxies |
| remote_access | Remote access services (RDP, VNC, etc.) |
| samba | Samba (Windows shares) |
| sip | SIP ports |
| ssdp | SSDP |
| ssh | SSH |
| synology | Synology NAS |
| telnet | Telnet |
| torrent | Common Torrent ports |

Update to the script above: added the option of extra filtering by keywords in a RegEx like dns|sip (Hei rules), so that only the matching lines of the whole list are accepted. This is checked on every line. If this option is omitted, every line with a valid IP address is imported.

Example:

$update url=https://project.turris.cz/greylist-data/greylist-latest.csv delimiter=, listname=turris timeout=8d heirule=dns|sip
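The per-line filter can be mimicked in Python to see which lines survive. The sample lines below are made up for illustration, loosely shaped like the Turris CSV (address first, then tags). `select_addresses` is a hypothetical name. Like the RouterOS `~` operator, `re.search` matches anywhere in the line.

```python
import re

IPV4_START = re.compile(r"^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}")

def select_addresses(lines, delimiter=",", heirule=None):
    """Return the first field of every line that starts with an IPv4 address
    and, if heirule is given, also matches that RegEx somewhere in the line."""
    rule = re.compile(heirule) if heirule else None
    selected = []
    for line in lines:
        if not IPV4_START.search(line):
            continue  # header, comment or empty line
        if rule is not None and not rule.search(line):
            continue  # none of the wanted Hei rules on this line
        selected.append(line.split(delimiter, 1)[0])
    return selected

# Hypothetical sample lines, not real Turris data
sample = ["# header", "address,tags", "192.0.2.1,dns ssh", "192.0.2.2,telnet", "192.0.2.3,sip"]
print(select_addresses(sample, heirule="dns|sip"))  # ['192.0.2.1', '192.0.2.3']
print(len(select_addresses(sample)))                # 3
```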

Not really relevant, but I did pick up something weird. I have the Turris list loaded and I’m also using it in the forward chain to block any communication from inside my LAN towards any outside IP on that list.

Strangely enough I get some hits on this ;-(

The IP address 47.94.96.203 seems to go back to an IP on the list below, belonging to Alibaba Advertising Holding or something.

https://github.com/firehol/blocklist-ipsets/blob/master/iblocklist_ciarmy_malicious.netset

The problem is that it seems to originate from my NAS, on which 15+ Docker containers, 3 VMs, etc. are running.

At the moment it’s not very clear what initiates it.

All my running containers are from trusted repos. No funny stuff to my knowledge (mostly things like influxdb, grafana, telegraf, watchtower, mosquitto, etc.).

The packet towards the Alibaba IP was ICMP, only a single instance.

The packet towards another Turris-marked IP is also listed in AbuseIPDB.

This packet was dropped trying to creep out of my LAN, coming from “something” on my NAS, source port 6800 > destination port tcp/48881.

I tried tcpdump on the “docker0” bridge on my Synology and I see a lot of internal 172.17.x.x container traffic, but I could not capture anything trying to reach the above IPs…

Interesting … a home network

https://www.abuseipdb.com/check/45.146.165.96

Interesting observations

Do you have solar panels and an inverter, and/or do you use Modbus to access them? That port range is often used to discover inverters or other Modbus devices, although normally UDP broadcast is used for that.

NOTE: The mystery of the ACL hit is solved. It turned out to be an old port-forward for torrent traffic that hit the NAS (torrent client not running), and the NAS actually replied to that IP address instead of staying silent. No worries.

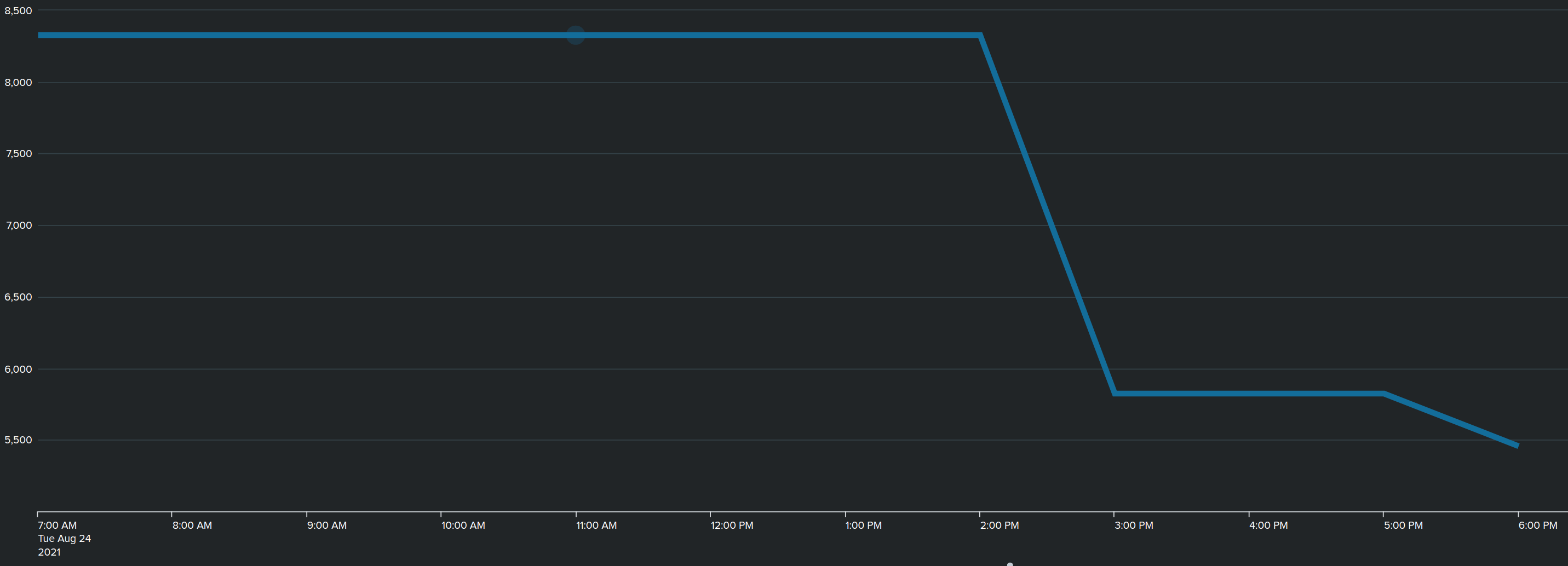

However, today the list update again did not go well.

At 2 PM the script started, and the list suddenly lost quite a few entries.

The weird thing: it remained stable for a few hours until around 5 PM, when I noticed the list had been completely emptied and also removed. Hence no more data after 6 PM.

So does the latest version of the script UPDATE the dynamic timer? Or does it create all downloaded entries from scratch?

Why the heck would it start throwing out entries hours after the download…

I have trouble following you on this.

If you read about the Greylist by Turris, you will see that it is updated once a week. They know that. If you keep hammering the proxy, they might put you in source-IP-address jail.

BTW, you are using an old version of the script that keeps the list active for one day. I use eight days: seven days is the refresh interval and one day is spare. After seven days the scheduler reads the new list, which Turris then won’t refresh for another seven days.

Update: you’re using a version that does not remove the old list, so you could end up blocking addresses that are no longer on the list.
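The timeout reasoning is plain arithmetic. If every entry lives for `timeout` after an import and the list is only re-imported every `refresh`, the entries are gone for `refresh - timeout` of each cycle. Here is a minimal Python sketch. The function name is hypothetical, and the sketch ignores how long the import itself takes.

```python
from datetime import timedelta

def expired_gap(refresh, timeout):
    """How long the entries are already gone before the next refresh re-adds them."""
    return max(refresh - timeout, timedelta(0))

week = timedelta(days=7)
print(expired_gap(week, timedelta(days=1)))  # 6 days, 0:00:00
print(expired_gap(week, timedelta(days=8)))  # 0:00:00
```

With 8d against a 7-day refresh there is no gap and one day of margin.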

Ah, yeah, you mentioned something like this earlier. I’ll change the timeout of each entry to 1w and schedule the script to run once a week.

Nevertheless, I wonder why it behaves the way I see: I can understand the drop in entries if I receive only partial data from their end, but why the sudden drop to 0 entries several HOURS later?

That is the part that is not clear to me, as if the dynamic entries had a short lifespan… but the script sets a 1d lifetime.

Hmm, yeah, let’s start running it only once a week and we’ll see…

Thx for pointing it out.

I have removed the first version of the script to avoid this happening to others. The first version was more a proof of concept that the Frankenstein script (two parts joined together) worked.

I have added support for domain names beside IP addresses. Not tested yet but it should work.

The changes are in bold, and I hope ‘+’ is supported. Otherwise it could be replaced by ‘*’.

:if (( $line~"^[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}" || $line~"^.+\\.[a-z.]{2,7}" ) && $line~$heirule) do={

This version is replaced by the version below. That one finds out the delimiter on its own.

search tag # rextended DNS RegEx

POSIX syntax:

^(([a-zA-Z0-9][a-zA-Z0-9-]{0,61}){0,1}[a-zA-Z]\.){1,9}[a-zA-Z][a-zA-Z0-9-]{0,28}[a-zA-Z]$

for MikroTik:

$line~"^(([a-zA-Z0-9][a-zA-Z0-9-]{0,61}){0,1}[a-zA-Z]\\.){1,9}[a-zA-Z][a-zA-Z0-9-]{0,28}[a-zA-Z]\$"

plausibly limited to 9 levels of label + domain: x9x.x8x.x7x.x6x.x5x.x4x.x3x.x2x.x1x.domain

and limited to 30 characters for the top-level domain (the longest one that currently exists is 24 characters, XN--VERMGENSBERATUNG-PWB)

Rule for DNS names:

the format is label.domain or label2x.label1x.domain or label3x.label2x.label1x.domain etc. (ignoring the FQDN form with a trailing dot, label.domain., which is never present on an address-list)

the max length for label and domain is 63 characters, but the longest domain today is 24 characters (XN--VERMGENSBERATUNG-PWB)

the min length is formally 1 character for a label and 2 for the domain

allowed characters for label and domain are the case-insensitive letters a-z A-Z, the digits 0-9 and the minus - (the _ is used for special cases, not for full domain names)

the first or the last (or the only) character of a label or domain can’t be -

the last (or the only) character of a label or domain can’t be a number

the first character of the domain can’t be a number

the first character of a label can be a number but must be followed by at least one letter

the max length of the whole string is 253 characters

EDIT: for DNS static RegEx, which does not support { }, use

^(([a-zA-Z0-9][a-zA-Z0-9-]*)?[a-zA-Z]\.)+[a-zA-Z][a-zA-Z0-9-]*[a-zA-Z]$
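Both DNS patterns can be exercised off-router with Python's `re` module, whose syntax accepts these particular POSIX expressions unchanged. The MikroTik string form only differs by the doubled backslash and the escaped `$`. The 253-character limit is not expressed in either RegEx, so this sketch checks it separately. The helper `is_dns_name` and the sample names are illustrative only.

```python
import re

# Bounded version: labels end with a letter, up to 9 labels plus a 2-30 character top domain
DNS_RE = re.compile(
    r"^(([a-zA-Z0-9][a-zA-Z0-9-]{0,61}){0,1}[a-zA-Z]\.){1,9}"
    r"[a-zA-Z][a-zA-Z0-9-]{0,28}[a-zA-Z]$"
)
# Unbounded version for RegEx engines without { } support
DNS_STATIC_RE = re.compile(r"^(([a-zA-Z0-9][a-zA-Z0-9-]*)?[a-zA-Z]\.)+[a-zA-Z][a-zA-Z0-9-]*[a-zA-Z]$")

def is_dns_name(name, pattern=DNS_RE):
    """True if name matches the pattern and respects the 253-character limit."""
    return len(name) <= 253 and pattern.fullmatch(name) is not None

for name in ["www.mikrotik.com", "example.xn--vermgensberatung-pwb", "1.com", "-ab.com", "151.99.125.9"]:
    print(name, is_dns_name(name), is_dns_name(name, DNS_STATIC_RE))
```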

Thanks to @kcarhc

I have implemented the determination of the delimiter. This should make the import script more flexible, with no need anymore to specify the delimiter for each list.
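The post does not show how the delimiter is determined. One possible heuristic is to take the first character that follows the leading IPv4 address (with optional prefix) on a data line. It is sketched here in Python purely as an assumption, not as the script's actual method. `detect_delimiter` is a hypothetical name.

```python
import re

LEADING_IPV4 = re.compile(r"[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}(/[0-9]{1,2})?")

def detect_delimiter(sample_line):
    """Return the character right after the leading address, or None if there is none."""
    m = LEADING_IPV4.match(sample_line)
    if m is None or m.end() == len(sample_line):
        return None  # not an address line, or a plain one-address-per-line list
    return sample_line[m.end()]

print(repr(detect_delimiter("192.0.2.1,dns")))      # ','
print(repr(detect_delimiter("192.0.2.0/24;spam")))  # ';'
print(repr(detect_delimiter("192.0.2.1")))          # None
```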

A version that also recognizes which format is used: IPv4, IPv4 with range, domain names or IPv6. A list can then only contain one type. Checking every line would be doable, but I assume the whole script would become very slow.

Update: added support for different kinds of lists. Supported are plain IPv4, IPv4 with range, and domain names. I use a simple RegEx for domain names.

Update 2: mixed lists are allowed when the delimiter is set in the config line, and the script shows what the delimiter is. The output shows which kind of list was recognized.

:local R "[0-9]{1,3}"; # storing RegEX part in variable to have shorter strings in the code

:if ($sline ~ "^$R\\.$R\\.$R\\.$R") do={:set $posix "^$R\\.$R\\.$R\\.$R";}

:if ($sline ~ "^$R\\.$R\\.$R\\.$R/[0-9]{1,2}") do={:set $posix "^$R\\.$R\\.$R\\.$R/[0-9]{1,2}"}

:if ($sline ~ "^.+\\.[a-z.]{2,7}") do={:set $posix "^.+\\.[a-z.]{2,7}"}

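A strange thing was that I could not use :local in the code above and had to resort to :set. (A variable declared with :local inside a do={} block only exists within that block, so $posix has to be declared before these :if lines and then assigned with :set.)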
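The three type checks can be tried in Python to see which type a sample line gets. This is only an illustration of the logic. `$sline` is presumably one sample line from the downloaded file. `CHECKS` and `detect_type` are hypothetical names. As in the RouterOS snippet, every matching check overwrites the previous result, so the order matters.

```python
import re

R = r"[0-9]{1,3}"  # same shorthand as the $R variable in the RouterOS snippet
# Same order as the three :if lines; a later match overrides an earlier one,
# so "IPv4 with prefix" wins over "IPv4" for a line like 192.0.2.0/24.
CHECKS = [
    ("IPv4", rf"^{R}\.{R}\.{R}\.{R}"),
    ("IPv4 with prefix", rf"^{R}\.{R}\.{R}\.{R}/[0-9]{{1,2}}"),
    ("domain", r"^.+\.[a-z.]{2,7}"),
]

def detect_type(sample_line):
    """Return the list type suggested by one sample line, or None if nothing matches."""
    kind = None
    for name, pattern in CHECKS:
        if re.search(pattern, sample_line):
            kind = name
    return kind

for line in ["192.0.2.1", "192.0.2.0/24", "ads.example.com", "# comment"]:
    print(line, "->", detect_type(line))
```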

Using a defined delimiter should allow importing mixed lists. This still has to be implemented and can be done with a RegEx.

New version can be found in a later posting.

Well done and nice idea…

If you want, you can use these RegExes to determine what type of items the file contains: DNS names, IP prefixes or only IPs

search for valid DNS

(([a-zA-Z0-9][a-zA-Z0-9-]{0,61}){0,1}[a-zA-Z]\\.){1,9}[a-zA-Z][a-zA-Z0-9-]{0,28}[a-zA-Z]

IP-Prefix: IP with mandatory subnet mask

((25[0-5]|(2[0-4]|[01]?[0-9]?)[0-9])\\.){3}(25[0-5]|(2[0-4]|[01]?[0-9]?)[0-9])\\/(3[0-2]|[0-2]?[0-9])

IP or IP-Prefix (subnet mask optional, checked if present)

((25[0-5]|(2[0-4]|[01]?[0-9]?)[0-9])\\.){3}(25[0-5]|(2[0-4]|[01]?[0-9]?)[0-9])(\\/(3[0-2]|[0-2]?[0-9])){0,1}

IP without prefix

((25[0-5]|(2[0-4]|[01]?[0-9]?)[0-9])\\.){3}(25[0-5]|(2[0-4]|[01]?[0-9]?)[0-9])
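These patterns are written as MikroTik strings, hence `\\.` and `\\/`. In Python raw strings the same expressions need only single backslashes, which makes them easy to test. This sketch anchors them with `re.fullmatch` to validate a whole field. Used unanchored, as with RouterOS `~`, they can also match part of a longer number, and the last line demonstrates that. The variable names are illustrative.

```python
import re

OCTET = r"(25[0-5]|(2[0-4]|[01]?[0-9]?)[0-9])"  # 0-255, leading zeros tolerated
PREFIX = r"(3[0-2]|[0-2]?[0-9])"                # 0-32
IP_ONLY = rf"({OCTET}\.){{3}}{OCTET}"
IP_WITH_PREFIX = rf"{IP_ONLY}/{PREFIX}"
IP_OPTIONAL_PREFIX = rf"{IP_ONLY}(/{PREFIX}){{0,1}}"

print(bool(re.fullmatch(IP_ONLY, "192.168.1.1")))           # True
print(bool(re.fullmatch(IP_ONLY, "256.1.1.1")))             # False
print(bool(re.fullmatch(IP_WITH_PREFIX, "10.0.0.0/33")))    # False
print(bool(re.fullmatch(IP_OPTIONAL_PREFIX, "10.0.0.0")))   # True
# Unanchored search finds a valid-looking address inside a longer number
print(re.search(IP_ONLY, "1234.1.1.1").group(0))            # 234.1.1.1
```

A syntactically valid entry such as 151.99.125.9/2 still passes `IP_WITH_PREFIX`, so a prefix-length check is needed on top of the RegEx.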

A new version and I think this is more or less complete now. I have also added a short explanation at the end what all the parameters do.

Update: during the import, the script checks whether the source file has changed in size, and if so the import is retried after a 2-minute wait. If no successful import was possible, you now get a specific message.

Next update: previously, if an import failed in the end, the list would already have been erased beforehand. Deleting is now only done on a successful import. This is possible because all the current entries are renamed to a backup address-list, and that backup address-list is removed on a successful import. I don’t change the timeout, so the entries could time out before the next import. So keep an eye on the log if you use it.
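The "rename to backup, import, delete the backup only on success" idea can be modelled in a few lines of Python. This is an in-memory sketch, not RouterOS code. Address-lists are modelled as a dict of sets, and the backup list name is hypothetical. The post does not say what happens to the backup after a failed import, so here it is simply left in place.

```python
def import_with_backup(lists, name, fetch_entries):
    """In-memory model of 'rename to backup, import, delete backup only on success'.

    lists: dict of address-list name -> set of addresses (stand-in for the router's lists)
    fetch_entries: callable returning the new addresses, or raising if the import fails
    """
    backup = name + "-backup"               # hypothetical backup list name
    lists[backup] = lists.pop(name, set())  # "rename" the current entries to the backup list
    try:
        new_entries = set(fetch_entries())
    except Exception:
        return False                        # failure: old entries survive in the backup list
    lists[name] = new_entries
    del lists[backup]                       # success: only now are the old entries deleted
    return True

def failing_fetch():
    raise OSError("fetch failed")           # simulates a broken download

lists = {"turris": {"192.0.2.1"}}
print(import_with_backup(lists, "turris", lambda: ["198.51.100.7"]), lists)
print(import_with_backup(lists, "turris", failing_fetch), lists)
```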

New version of this script can be found in this posting: https://forum.mikrotik.com/viewtopic.php?p=879181#p935938

A big “Thank you!” towards all contributors!


Especially to profinet who thought of combining the two scripts to create this “Frankenstein”.

I’m taking it slow with my version because I also want to handle fetch errors

(thanks to msatter for the idea of identifying the type of list from its contents)

(I never saw msatter thank me for the method of downloading a file one piece at a time)

http://forum.mikrotik.com/t/fetch-capable-of-following-redirects/151723/7

This is work-in-progress code for handling fetch errors; it is ready to paste into the terminal for testing purposes.

/file remove [find where name="testfetch.txt"]

{

:local jobid [:execute file=testfetch.txt script="/tool fetch url=http://mikrotik.com"]

:put "Waiting the end of process for file testfetch.txt to be ready, max 20 seconds..."

:global Gltesec 0

:while (([:len [/sys script job find where .id=$jobid]] = 1) && ($Gltesec < 20)) do={

:set Gltesec ($Gltesec + 1)

:delay 1s

:put "waiting... $Gltesec"

}

:put "Done. Elapsed Seconds: $Gltesec\r\n"

:if ([:len [/file find where name="testfetch.txt"]] = 1) do={

:local filecontent [/file get [/file find where name="testfetch.txt"] contents]

:put "Result of Fetch:\r\n****************************\r\n$filecontent\r\n****************************"

} else={

:put "File not created."

}

}

In this case we get at the end “closing connection: <302 Found “https://mikrotik.com/”> 159.148.147.196:80 (4)”

because “http ://mikrotik.com” redirects to “https ://mikrotik.com/” (which redirects again to “https ://www.mikrotik.com/”) (spaces added on purpose)
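Since the failed fetch reports the redirect target in its last line, a script could extract that URL and fetch again. Below is a small Python sketch of the parsing. It assumes the message always has the `<3xx Reason "URL">` shape seen in the single sample above, written here with straight quotes. `redirect_target` is a hypothetical helper, not part of `/tool fetch`.

```python
import re

# Assumed shape of the failure message: <3xx Reason "target-URL">
REDIRECT_RE = re.compile(r'<(30[12378]) [^"]*"([^"]+)">')

def redirect_target(fetch_output):
    """Return (status, URL) from a fetch redirect message, or None if there is none."""
    m = REDIRECT_RE.search(fetch_output)
    if m is None:
        return None
    return int(m.group(1)), m.group(2)

msg = 'closing connection: <302 Found "https://mikrotik.com/"> 159.148.147.196:80 (4)'
print(redirect_target(msg))  # (302, 'https://mikrotik.com/')
```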