Has anyone tried to get GPT4 to write a script for Mikrotik? I wonder how well it does. I can’t try it myself: I’m in Russia and I don’t have a VDS abroad.

I wonder when GPT will be able to replace Rex?

ChatGPT is as good at writing ROS scripts as it is at anything else: mostly it gets things done (surprisingly well), but sometimes it fails miserably. The problem is not that ChatGPT fails; the problem is that it doesn’t admit it can’t provide a good result and presents hallucinations instead.

That can happen with ROS scripts as well. And when an inexperienced user stumbles upon such a hallucination and it then fails to run on ROS, people are quick to blame ROS for being buggy.

So, no, IMO Rex won’t be replaced by AI any time soon.

I can’t say much about GPT4; maybe the paid version is better. But in my experience GPT3.5 fails miserably in most cases: it keeps imagining things that don’t exist in the real world, and not just with ROS scripts.

I only tried GPT3.5. This neural network, of course, cannot write a finished script, but it can significantly reduce the time a non-professional spends writing scripts by providing ready-made code snippets on request instead of searching for them on forums. Considering that GPT4 is an order of magnitude better, perhaps the time is not far off when it will be possible to get ready-made scripts from GPT.

And if Rex teaches it, then…

This is what GPT produced in 5 seconds when asked to write a value to a specific OID on a specific device. Yes, it’s simple, but it saves me 10 minutes of writing and checking syntax.

```routeros
# settings
:local deviceIP "192.168.1.1"
:local snmpCommunity "public"
:local relayOID "1.3.6.1.4.1.1234.1.1.1.1.1"

# function
:global snmpSet do={
    /tool snmp-set oid=$1 value=$2
}

# example
$snmpSet $relayOID 1 oid-type=integer device=$deviceIP community=$snmpCommunity
```

Good luck using that code.

I agree, this is not a good example.
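For comparison, a minimal sketch of the same SNMP write without the wrapper function. In the generated version, `$snmpSet` forwards only `$1` and `$2`; the named arguments `device=`, `community=` and `oid-type=` simply become local variables inside the function that are never used, so the target address and community never reach `/tool snmp-set`. The parameter names `address` and `type` below are an assumption about the tool's syntax; check them with `/tool snmp-set ?` on your RouterOS version. The IP, community and OID are the example values from the post above.

```routeros
# Example values taken from the generated script above
:local deviceIP "192.168.1.1"
:local snmpCommunity "public"
:local relayOID "1.3.6.1.4.1.1234.1.1.1.1.1"

# Call the tool directly so every argument actually reaches it.
# ASSUMPTION: parameter names address/community/oid/type/value;
# verify with "/tool snmp-set ?" before relying on this.
/tool snmp-set address=$deviceIP community=$snmpCommunity oid=$relayOID type=integer value=1
```

Calling the tool directly avoids the whole class of argument-forwarding bugs; a wrapper only pays off if it is reused many times.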

And this is what GPT produced when asked to “write a script to search for active Internet routes”:

```routeros
:put [/ip route print where dst-address~"0.0.0.0/0"]
```

It’s better, isn’t it?

And you’re seriously comparing GPT’s ROS scripting skills with Rex? Oh my… we need more cats.

The issue is that you have to know scripting well enough to spot and fix errors. If you can do that, it’s kinda easier to just write the script. But if you don’t know scripting, it’s impossible to tell a right answer from a wrong one, which makes it less useful than just searching here or MikroTik’s Tips and Tricks docs.
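To illustrate the kind of errors you need to spot in the route example above: `print` inside `[...]` writes to the console rather than returning the matching routes as a value, `~` is a regular-expression match (so `0.0.0.0/0` is treated as a pattern, not an exact prefix), and nothing filters on the route actually being active. A minimal sketch of a corrected version, assuming RouterOS v7, where policy routes sit in separate routing tables (v6 used `routing-mark` instead of `routing-table`):

```routeros
# List active default routes with their gateway and routing table.
# ASSUMPTION: RouterOS v7 (the routing-table property does not exist in v6).
:foreach r in=[/ip route find where active and dst-address=0.0.0.0/0] do={
    :local gw [/ip route get $r gateway]
    :local tbl [/ip route get $r routing-table]
    :put ("gateway=" . $gw . " table=" . $tbl)
}
```

Using `find` and `get` instead of `print` is what makes the result usable by the rest of a script.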

Now, I’ll say ChatGPT is very good with BIND9 DNS configuration; I tried that out a while back. But BIND has 30+ years of largely unchanging examples, and the same goes for JavaScript. There just isn’t enough RouterOS scripting data to train on (basically whatever is on r/mikrotik on Reddit and similar places), nor a corpus of books on RouterOS scripting.

I suspect it uses an LSP (language server, which VS Code, vi, etc. use to check syntax) during training, and most common languages have one. But there is no LSP for Mikrotik, so there’s zero chance for it to validate scripts during training.

Thank you very much Amm0 for your answer.

Jokes aside, the release of GPT5 is just around the corner. Besides, you need to clearly understand that what developers are releasing to the public now and what they already have in practice are two different things, because they are controlled by the military.

Namely, the military gets all the latest scientific developments in any country, whether we like it or not.

In addition, private capital implies that there are well-funded non-governmental projects as well, run by mad scientists, however many of them there may be.

Just remember television and the atomic nucleus. God alone knows what can be done in science.

It’s good that not all electronic libraries, including medical ones, are accessible to neural networks yet. As for programs, everything is already clear.

Unfortunately, I think this is inevitable, and in 10 years programmers in the modern sense of the word will cease to exist.

And the problems will begin when the machine consistently writes working code and the programmer has to check the computer’s work.

How to make sure that only the programmer decides whether a program gets launched: that is where our end begins.

Perhaps my forecast timeline is even too optimistic. Either humanity will stop developing instruments of its own destruction and find means of protection, or…

Rex, being a very smart person, understood all this and stopped writing scripts for Mikrotik, not wanting to train the neural network. In this sense, my favorite is the famous story “Gomez” by Cyril Kornbluth (if you haven’t read it, I recommend it).


While being impressed by AI’s success, it’s important to keep in mind that AI can only combine[*] the knowledge it absorbed during training. The (perceived) quality of this combinatorial process does get better with newer AI generations (so yes, GPT5 will mostly give better answers than GPT4 does). But what AI did not learn, it can’t output. So the lack of useful MT scripting examples will still plague newer GPTs… And we’ve yet to see an AI that can actually invent something completely new (that would be Rex 2.0).

[*] In certain cases, the fuzziness of the combinatorial process doesn’t matter or is even a good thing. When writing an essay, using certain words instead of others with a similar meaning might mean that GPT has “a personal writing style”. When predicting the path of a hurricane in the Caribbean Sea, it doesn’t matter if the predicted path runs 20 km east or west as long as it doesn’t hit Florida (but it’s OK if it hits Cuba). But in the digital domain, a result is either right or not. If GPT produces a ROS script, a blend of v6 and v7 -isms is not OK; the script has to be exactly right (it has to be the letter A, not a blurry something that might resemble an A but might just as well resemble a koala).
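As a concrete example of such v6/v7 -isms: adding a default route in a separate routing table is written differently in the two versions, and a script that mixes them will typically fail on both. A minimal sketch; the gateway `192.0.2.1` and the table name `isp2` are placeholders:

```routeros
# RouterOS v6: the route carries a routing mark directly
/ip route add dst-address=0.0.0.0/0 gateway=192.0.2.1 routing-mark=isp2

# RouterOS v7: the routing table must exist first, then the route references it
/routing table add name=isp2 fib
/ip route add dst-address=0.0.0.0/0 gateway=192.0.2.1 routing-table=isp2
```

The two halves are alternatives for different versions, not one script: `routing-mark` is rejected on v7, and the `/routing table` menu does not exist on v6.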

> Despite the fact that the AI’s success is impressive, it is important to keep in mind that the AI can only combine [*] the knowledge it has gained during training.

Don’t think like that. GPT4, for example, can search for knowledge on the Internet, digging into any online manuals and documents (well, for now only where it is allowed to, because there is a fairly strict filter on it). I gave the paid version of GPT4 the same tasks I had given GPT 3.5 and got qualitatively better, working code. I will share the results soon. As for Mikrotik and scripts: yes, Rex may not need GPT yet, but that is only for now. And there is practically only one Rex (well, say there are 5 or 50 of them in the world, it doesn’t matter). Very soon, provided new versions of GPT are made available at all, the neural network will write very good scripts in seconds.

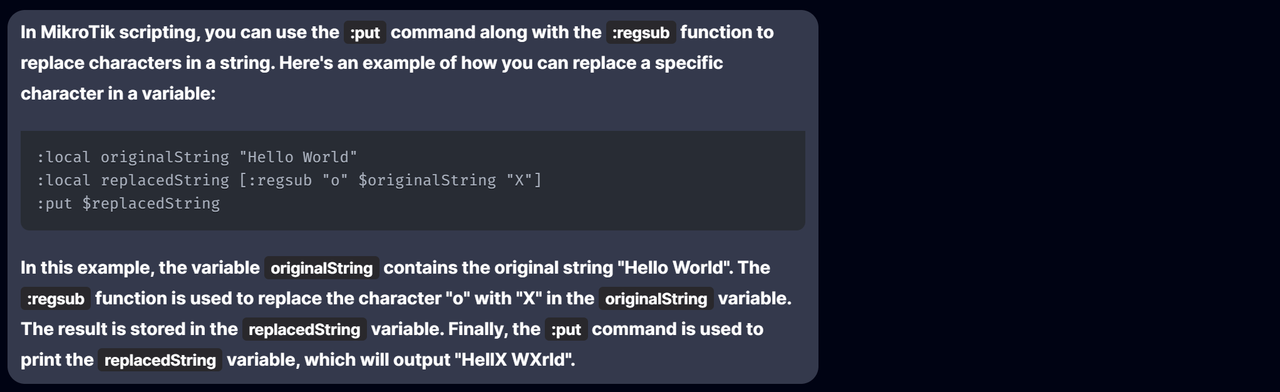

ChatGPT search: “mikrotik scripting replace character”.

Response:

:regsub ???
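RouterOS scripting has no `:regsub` command. A common workaround is to walk the string with `:pick` and build a new one. A minimal sketch; the function name `replaceChar` is hypothetical. It works byte by byte, so it is fine for ASCII but does not treat multi-byte UTF-8 characters (such as Cyrillic) as single characters.

```routeros
# Hypothetical helper: replace every occurrence of one character.
# Usage: [$replaceChar <string> <char-to-find> <replacement>]
:global replaceChar do={
    :local out ""
    :local len [:len $1]
    # Guard: :for counts downward when from > to, so skip empty input
    :if ($len = 0) do={ :return "" }
    :for i from=0 to=($len - 1) do={
        # :pick with a single index returns one character (byte)
        :local ch [:pick $1 $i]
        :if ($ch = $2) do={
            :set out ($out . $3)
        } else={
            :set out ($out . $ch)
        }
    }
    :return $out
}

:put [$replaceChar "a-b-c" "-" "_"]
```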


Well, the fact it can train itself in (near) real time using on-line materials doesn’t change the fact that it can only give back something similar to what already exists. But again, “similar” is not good enough in a world where something is either right or wrong (and “almost right” isn’t enough).

So no novel ideas from GPT… yet.

@mkx

Believers will keep on believing.

I have the impression someone is not willing to listen to reason because of tunnel vision.

My personal view, not affected by any AI tool…

Besides, if I ask ChatGPT itself, this is what I get.

So who to believe???

Will chatgpt be able to write a perfect program without any errors ?

ChatGPT

No, ChatGPT, like any other tool or software, is not guaranteed to write perfect programs without errors. While it can assist with generating code and providing suggestions, it’s important to note that programming often requires a deep understanding of the problem at hand, the programming language, and the specific requirements. ChatGPT might help you draft code, explain concepts, or provide guidance, but it’s crucial for developers to review and test the generated code thoroughly. Writing bug-free and efficient code involves careful consideration of various factors, and the responsibility ultimately lies with the programmer to ensure the correctness and reliability of the code.

Additionally, programming involves creative problem-solving, and code written by AI may lack the intuition and context that a human programmer brings to the task. Therefore, while ChatGPT can be a useful tool, it’s not a substitute for human expertise and diligence in software development.

The last paragraph of ChatGPT-generated text is, IMO, the crux of the whole ordeal.

Long live rextended!

I didn’t want to weigh in, so as not to influence the discussion and to see where it would end up, but…

I am very flattered that I am continually cited as an example and as ChatGPT’s antagonist…

Thank you all…

No, gentlemen, it’s not like that. I gave GPT 3.5 three tasks (write a script to search for active Internet routes, including marked ones; write a script to turn on a relay through the Laurent 5G module; write a script to transcode Cyrillic text for sending to a Telegram bot), and it failed them: it got the constructs right but made syntax mistakes or used non-existent syntax. GPT 4, given the same tasks, asked clarifying questions and did not make more than one mistake, ultimately producing fully working code. In the case of relay control, it found the manual for this module on the Internet by itself and took the required HTTP GET request from there.
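The relay task boils down to a single HTTP GET request, which RouterOS can send with `/tool fetch`. A minimal sketch; the module address and the command path are hypothetical placeholders, and the real request string has to come from the module’s manual:

```routeros
# HYPOTHETICAL values: replace with the module's IP and the exact
# command string documented in its manual.
:local moduleIP "192.168.0.101"
:local relayCmd "hypothetical/relay-on-command"

# as-value + output=user returns the response to the script
# instead of saving it to a file.
:local result [/tool fetch url=("http://" . $moduleIP . "/" . $relayCmd) output=user as-value]
:put ($result->"data")
```

Printing the response body makes it easy to confirm that the module accepted the command.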

Whether you believe it or not is up to you. I have screenshots of all the GPT4 responses and will use them in my article, which will be published soon. Sane people don’t just believe; they check for themselves. I want to emphasize that I used the paid version of GPT4; it is much better than the free one.